Paper accepted in Nature Communications and selected as one of the editors' picks

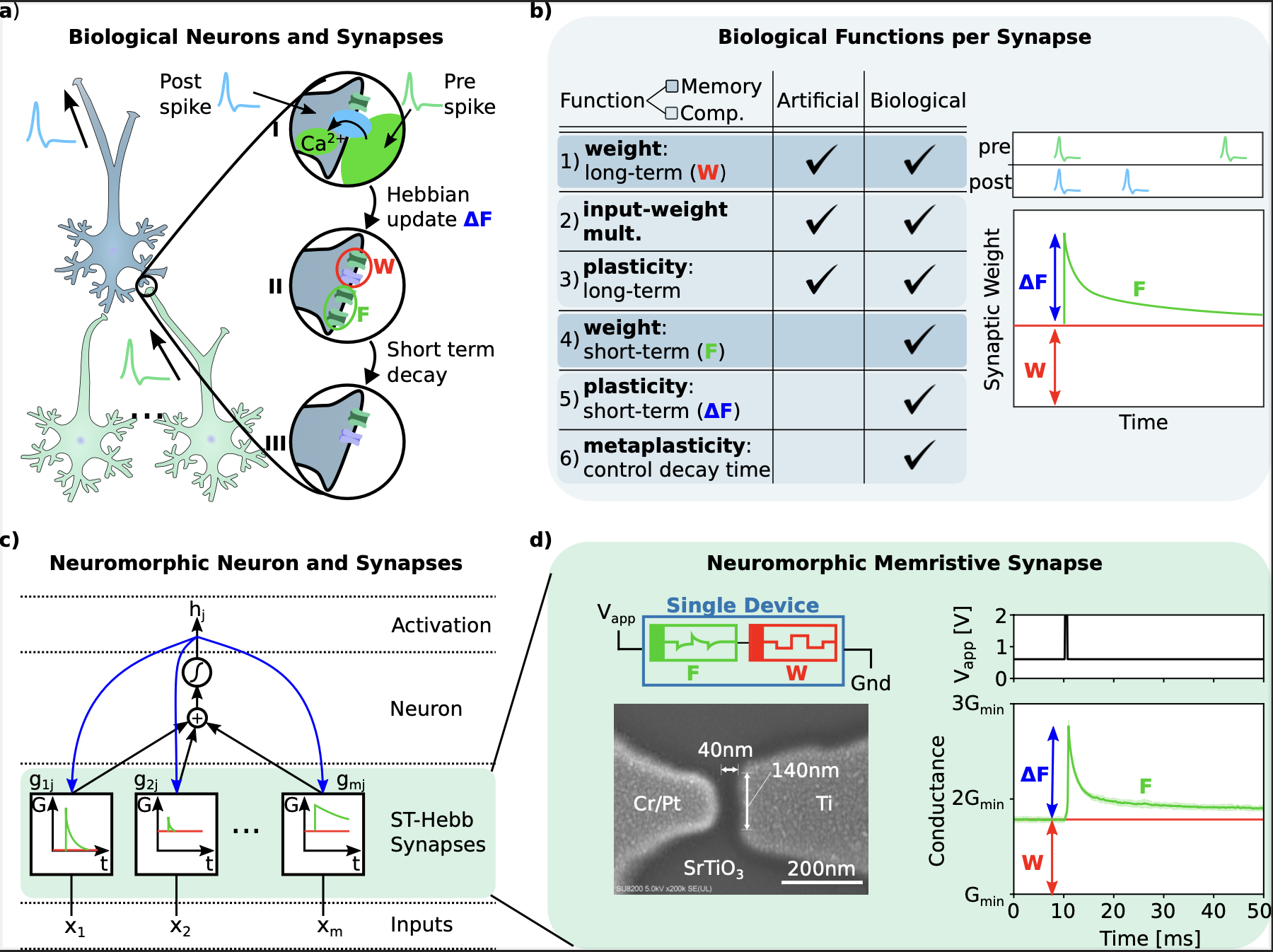

Christoph Weilenmann et al. demonstrated novel nanoscale devices that inherently combine long- and short-term memory with synaptic computation.

Control over the dynamically evolving memory states enables meta-learning ("learning-to-learn") in new kinds of bio-inspired recurrent neural networks that use our devices as hardware synapses. Through simulation, we show that such networks outperform traditional ones on complex machine learning tasks and that they run nearly 100 times more energy-efficiently on our hardware than on a state-of-the-art GPU.

The paper was published in this month's issue of Nature Communications and selected by the editors as one of the 50 best recent papers in the category "Devices"!

Link to the article: https://www.nature.com/articles/s41467-024-51093-3